Seeing Is Believing: How Cognitive Bias Impacts Safety Decision-Making

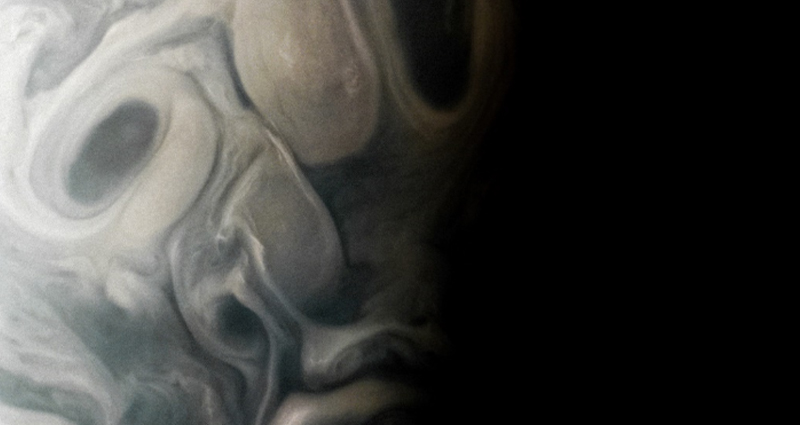

Take a look at the image above. A long-lost Picasso? A ceremonial mask from an aboriginal jungle tribe? Nope. This is a close-up photo of part of Jupiter taken by the NASA Juno spacecraft on 7 September 2023.

The remarkable similarity to a human face is a case of what scientists call pareidolia: the false perception of a non-existent pattern in everyday objects. The whimsical pastime of lying on your back in the park and finding patterns in clouds is another example of pareidolia.

In safety management, we often see a phenomenon that is related to and yet the opposite of pareidolia. Instead of seeing something that is not there, people fail to see something that is right in front of their noses due to cognitive bias. One type of cognitive bias is called “neglect of probability.”

An example will help illustrate this all-too-human tendency. Imagine the Mega Millions Jackpot is $1 billion this week. It is well known that the probability of winning is about 1 in 303 million. And yet, many people will go out of their way to buy lottery tickets. They are setting aside the knowledge of the low probability with eyes glazed over in hopeful anticipation of their winnings.

Neglect of probability occurs when we become enamored of the value of an outcome while failing to appreciate how unlikely that outcome actually is. The people in line to purchase a Mega Millions lottery ticket have deliberately set aside logic and reason. But neglect of probability can also skew our decisions when we are not deliberately ignoring the odds and simply fail to appreciate what they mean.

Cognitive Bias in Action

Let’s say you have completed a flight risk assessment and determined that the risk of a safety incident is 0.2%. That is nearly zero, right? It is possible that you will choose to go about your day in exactly the same way as if the risk were indeed zero. But it isn’t zero. In fact, probability theory tells us that if a flight duty with a 0.2% safety risk were operated each day for a year, and each day's risk were independent of the others, the cumulative chance of at least one incident would climb to about 52%. It has become more likely than not!
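For readers who want to check the arithmetic, here is a minimal Python sketch. It assumes every flight duty carries the same risk and that the days are independent of one another, which is a simplification. The function names `cumulative_risk` and `duties_until_threshold` are made up for this illustration and are not part of any Pulsar product.

```python
import math


def cumulative_risk(per_duty_risk: float, num_duties: int) -> float:
    """Chance of at least one incident across num_duties duties.

    Assumes each duty has the same risk and the duties are independent.
    """
    # The chance of no incident on every single duty is (1 - p) ** n,
    # so the chance of at least one incident is the complement.
    return 1.0 - (1.0 - per_duty_risk) ** num_duties


def duties_until_threshold(per_duty_risk: float, threshold: float = 0.5) -> int:
    """Smallest number of duties at which cumulative risk reaches the threshold."""
    # Solve 1 - (1 - p) ** n >= threshold for n, then round up.
    return math.ceil(math.log(1.0 - threshold) / math.log(1.0 - per_duty_risk))


if __name__ == "__main__":
    daily_risk = 0.002  # the 0.2% figure from the example above
    print(f"Risk over 365 days: {cumulative_risk(daily_risk, 365):.1%}")
    print(f"Days until risk passes 50%: {duties_until_threshold(daily_risk)}")
```

Under these assumptions, the one-year figure comes out just under 52%, and the cumulative risk crosses the 50% mark a little before the year is over. Real operations rarely have identical, independent days, so treat the result as an illustration of how small risks compound rather than as a forecast.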

Neglecting probability is a form of cognitive bias that can lead to poor decisions. And the risk of poor decisions is elevated when we have a strong preference for one outcome that has a low probability of occurring. In the example above, completing the flight duty period day after day for years without incident is the preferred outcome. But a low probability of an incident today becomes a high probability in the aggregate. How can we avoid falling into this trap?

Taking a Rigorous Approach to Safety

The best way to manage safety while minimizing the impact of human tendencies such as cognitive bias is to follow a set of policies and procedures specifically designed to work with—not against—probability considerations.

At Pulsar, we use a framework known as a comprehensive fatigue risk management program (FRMP) to address fatigue risk. A similar rigorous approach may be taken with operational risk management procedures addressing other specific risks, such as terrain and weather.

To learn more about fatigue risk management for your organization, contact us at Pulsar. We can analyze your existing schedules and provide a Fatigue Snapshot of retrospective as-flown operations. This will serve as a baseline for developing policies and procedures grounded in what the data actually show, not in pareidolia.

Pulsar Informatics is an IS-BAO I3SA certified company specializing in systems that help organizations reduce fatigue-related risk and achieve peak performance. Fleet Insight enables safety managers and schedulers to proactively evaluate fatigue across their entire operation’s schedule and formulate mitigation strategies. Fatigue Meter Pro Planner is used by pilots, flight attendants, and maintenance personnel to evaluate their individual flight and duty schedule.

http://www.pulsarinformatics.com

© 2025 Pulsar Informatics, Inc. All Rights Reserved.
